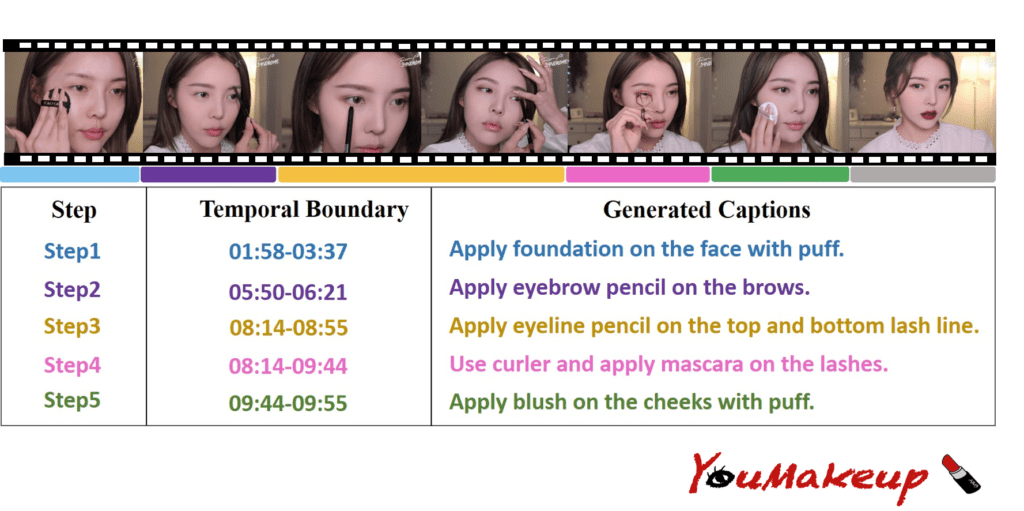

Inputs: An untrimmed make-up video ranging from 15s to 1h.

Outputs: The temporal boundary and the generated description of detected make-up events in the video.

Prizes

- 1st prize: ¥ 10,000

- 2nd prize: ¥ 3,000

- 3rd prize: ¥ 2,000

Schedule (Beijing, UTC+8)

- April 25th, 2022 – Training / validation set released

- June 10th, 2022 – Testing set released and submission opened

- June 25th, 2022 – Submission deadline

- June 26th-30th, 2022 – Objective evaluation

- July 1st, 2022 – Evaluation results announce

- July 6th, 2022 – Paper submission deadline

Submission Details

Results should be stored in results.json, following this format:

{

'video_id': [

{

'sentence': sent,

'timestamp': [st_time, ed_time],

}, ...

],

}

Teams can submit the results.json once daily. The evaluation process might be time-consuming, but a failed submission won’t affect the number of submission chances.

Evaluation Metrics

The challenge assesses both the localization and captioning abilities of models. For localization, we compute the average precision (AP) across tIoU thresholds of {0.3,0.5,0.7,0.9}. For dense captioning, we measure BLEU4, METEOR, and CIDEr for matched pairs between generated captions and the ground truth across tIoU thresholds of {0.3, 0.5, 0.7, 0.9}.

About the Dataset

Makeup instructional videos inherently possess a finer granularity than open-domain videos. While many steps might share similar backgrounds, they showcase subtle yet essential differences. This includes particular actions, tools, and facial areas applied, which can produce distinct facial effects.

The YouMakeup dataset, sourced from YouTube, encompasses 2,800 makeup instructional videos, amounting to over 420 hours. Every video is annotated with a series of steps, with details like temporal boundaries, highlighted facial areas, and step-by-step descriptions. In total, there are 30,626 steps, with each video having an average of 10.9 steps. Videos typically range from 15s to 1h, averaging 9 minutes in length.

YouMakeup Dataset Overview

| Dataset | Total | Train | Val | Test | Video_len |

|---|---|---|---|---|---|

| YouMakeup | 2,800 | 1,680 | 280 | 840 | 15s-1h |