LLaVA (Large Language and Vision Assistant) is an open-source project that aims to build large multimodal models whose vision and language understanding approaches that of GPT-4. The key idea is to use language-only GPT-4, which never sees the images themselves, to generate visual instruction-tuning data from textual descriptions of images. This data is then used to teach the multimodal model to follow instructions that combine vision and language.
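Language-only GPT-4 cannot see pixels, so each image has to be turned into text before GPT-4 can write instructions about it. The LLaVA paper does this with the image's captions and object bounding boxes. The minimal sketch below shows one way to assemble such a text-only context. The helper names, the normalized `[x1, y1, x2, y2]` box format, and the prompt wording are illustrative assumptions, not the project's actual prompts.

```python
from typing import List, Tuple

# (label, (x1, y1, x2, y2)) with coordinates assumed normalized to [0, 1].
Box = Tuple[str, Tuple[float, float, float, float]]


def build_text_context(captions: List[str], boxes: List[Box]) -> str:
    """Hypothetical helper: render an image as captions followed by one line per box."""
    lines = list(captions)
    for label, (x1, y1, x2, y2) in boxes:
        lines.append(f"{label}: [{x1:.3f}, {y1:.3f}, {x2:.3f}, {y2:.3f}]")
    return "\n".join(lines)


def build_generation_prompt(context: str, task: str) -> str:
    """Hypothetical prompt asking a text-only model to write instruction data."""
    return (
        "You are given a text description of an image you cannot see.\n"
        f"{context}\n\n"
        f"Task: {task}"
    )


# Example usage with made-up annotations.
ctx = build_text_context(
    ["A dog runs along a sandy beach."],
    [("dog", (0.1, 0.2, 0.4, 0.9))],
)
print(build_generation_prompt(ctx, "Write a conversation about this image."))
```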

Dataset

The core dataset for LLaVA is LLaVA Visual Instruct 150K. Despite its name, it contains 158K multimodal instruction-following examples generated by prompting GPT-4. It consists of three subsets:

- Conversation (58K): Dialogues about images with conversational instructions and responses

- Detailed Description (23K): Detailed visual descriptions of images by GPT-4

- Complex Reasoning (77K): Answers by GPT-4 to harder reasoning questions about images
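Each training example pairs an image with a short multi-turn exchange. The sketch below assumes the JSON layout of the released instruction files: a list of records with `id`, `image`, and a `conversations` list whose turns carry `from` (`"human"` or `"gpt"`) and `value`, with an `<image>` placeholder in the first human turn. It flattens one record into (instruction, response) pairs. The sample record and the `to_pairs` helper are hypothetical, so check the field names against the files you actually download.

```python
import json
from typing import Dict, List, Tuple

# Hypothetical record in the assumed LLaVA-Instruct JSON layout.
SAMPLE = """
[
  {
    "id": "000000000001",
    "image": "000000000001.jpg",
    "conversations": [
      {"from": "human", "value": "<image>\\nWhat is the man holding?"},
      {"from": "gpt", "value": "He is holding an umbrella."},
      {"from": "human", "value": "Why might he need it?"},
      {"from": "gpt", "value": "The sky looks overcast, so it may rain."}
    ]
  }
]
"""


def to_pairs(record: Dict) -> List[Tuple[str, str]]:
    """Pair each human turn with the assistant turn that follows it."""
    turns = record["conversations"]
    pairs = []
    for human, gpt in zip(turns[0::2], turns[1::2]):
        if human["from"] != "human" or gpt["from"] != "gpt":
            raise ValueError(f"unexpected turn order in record {record['id']}")
        # Drop the image placeholder; the image itself is supplied separately.
        prompt = human["value"].replace("<image>", "").strip()
        pairs.append((prompt, gpt["value"]))
    return pairs


records = json.loads(SAMPLE)
for question, answer in to_pairs(records[0]):
    print(f"Q: {question}\nA: {answer}")
```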

Some key details about the dataset:

- Created in April 2023 by prompting the GPT-4 API

- Available under the CC BY-NC 4.0 license

- Primarily intended for research on multimodal models

- Send questions or comments to the project's GitHub issues page

Model

LLaVA connects a vision encoder (CLIP ViT-L/14) to a large language model (Vicuna) through a trainable projection layer that maps visual features into the LLM's word-embedding space. It is trained in two stages:

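- Feature alignment pretraining: keep the vision encoder and the LLM frozen and train only the projection layer, so that visual features line up with the LLM's word embeddings. This stage uses a filtered subset of CC3M (about 595K image-text pairs).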

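```python
# Pretrain (feature alignment): a sketch.
# Assumes `dataloader` yields LLaVA processor outputs (input_ids, attention_mask,
# pixel_values, labels) built from the CC3M subset; it is not defined here.
import torch
from transformers import LlavaForConditionalGeneration

cc3m_subset_path = "/path/to/cc3m/subset"  # used to build `dataloader`

# LlavaForConditionalGeneration loads HF-format checkpoints; substitute one
# (e.g. from the llava-hf organization) if this id is in the original LLaVA format.
model = LlavaForConditionalGeneration.from_pretrained(
    "liuhaotian/llava-llama-2-13b-v0",
    torch_dtype=torch.bfloat16,
    device_map="auto",  # spread layers across the available GPUs
)

# Freeze the vision encoder and the LLM; train only the projector.
for name, param in model.named_parameters():
    param.requires_grad = "multi_modal_projector" in name

optimizer = torch.optim.AdamW(
    [p for p in model.parameters() if p.requires_grad], lr=2e-3
)

num_epochs = 1
model.train()
for epoch in range(num_epochs):
    for batch in dataloader:
        loss = model(**batch).loss  # forward pass; loss is computed from `labels`
        loss.backward()             # backward pass; only the projector accumulates grads
        optimizer.step()            # update parameters
        optimizer.zero_grad()
```

- Visual instruction tuning: fine-tune on LLaVA Visual Instruct 150K, updating the projection layer and the LLM end to end while the vision encoder stays frozen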

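```python
# Visual instruction tuning: a sketch with the same assumptions as stage 1,
# except that `dataloader` is now built from the instruction data.
import torch
from transformers import LlavaForConditionalGeneration

instruct_data_path = "/path/to/llava/instruct/data"  # used to build `dataloader`

# Continue from the stage-1 (feature alignment) checkpoint.
model = LlavaForConditionalGeneration.from_pretrained(
    "/path/to/pretrained_llava",
    torch_dtype=torch.bfloat16,
    device_map="auto",
)

# Keep the vision encoder frozen; update the projector and the LLM.
for name, param in model.named_parameters():
    param.requires_grad = "vision_tower" not in name

optimizer = torch.optim.AdamW(
    [p for p in model.parameters() if p.requires_grad], lr=2e-5
)

num_epochs = 3
model.train()
for epoch in range(num_epochs):
    for batch in dataloader:
        loss = model(**batch).loss  # forward pass
        loss.backward()             # backward pass
        optimizer.step()            # update parameters
        optimizer.zero_grad()
```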
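The link between the two models can be illustrated with a toy example. In the original LLaVA design, a trainable projection matrix maps each CLIP patch feature into the LLM's word-embedding space, and the resulting visual tokens enter the LLM's input sequence alongside the embedded text tokens. The NumPy sketch below uses small made-up dimensions instead of the real ones (1024-dimensional CLIP ViT-L/14 features, 5120-dimensional Vicuna-13B embeddings). The function names and the `TRAINABLE` table are hypothetical; the table simply restates which components each training stage above updates.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy sizes (assumptions); real LLaVA uses d_vision=1024 and d_text=5120.
num_patches, d_vision, d_text = 4, 8, 16

# Trainable projection W: maps vision features (d_vision) to LLM embeddings (d_text).
W = rng.normal(scale=0.02, size=(d_vision, d_text))


def project_visual_features(z_v: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Map patch features of shape (N, d_vision) to visual tokens of shape (N, d_text)."""
    return z_v @ w


def build_input_sequence(h_v: np.ndarray, text_embeds: np.ndarray) -> np.ndarray:
    """Place visual tokens before the text embeddings (a simplification: LLaVA
    inserts them where the image placeholder appears in the prompt)."""
    return np.concatenate([h_v, text_embeds], axis=0)


# Components updated in each stage; the vision encoder is never updated.
TRAINABLE = {
    "stage1_feature_alignment": {"projector"},
    "stage2_instruction_tuning": {"projector", "llm"},
}

z_v = rng.normal(size=(num_patches, d_vision))  # stand-in for frozen CLIP output
text_embeds = rng.normal(size=(5, d_text))      # stand-in for 5 embedded text tokens
seq = build_input_sequence(project_visual_features(z_v, W), text_embeds)
print(seq.shape)
```

A single linear layer keeps the connector cheap to train in stage 1, which is why that stage can update only the projector.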

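Together, these two stages let LLaVA follow open-ended instructions about images, such as holding a conversation about a photo, describing it in detail, or reasoning about what it shows. The original LLaVA paper reports a relative score of 85.1% compared with GPT-4 on a synthetic multimodal instruction-following benchmark.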

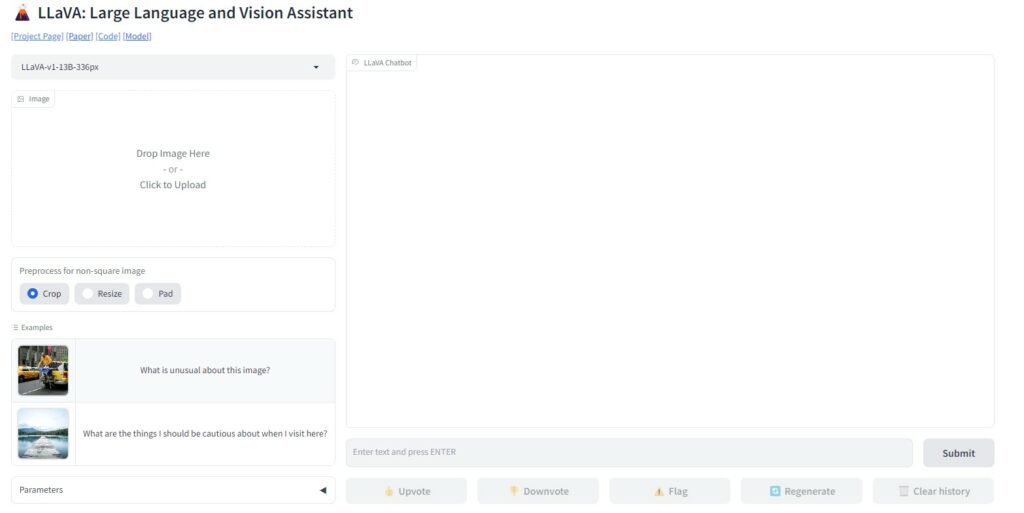

Code and Usage

The LLaVA codebase provides implementations for training, evaluating, and serving the models:

- Training scripts for pretraining and fine-tuning

```bash
# Pretraining script
python run_pretraining.py \
  --model_name_or_path "liuhaotian/llava-llama-2-13b-v0" \
  --train_file "/path/to/cc3m_subset/train.jsonl" \
  --output_dir "/path/to/checkpoints" \
  --img_size 336 \
  --prompt_version "v1" \
  --max_length 2048 \
  --per_device_train_batch_size 16 \
  --gradient_accumulation_steps 8 \
  --num_train_epochs 1 \
  --fp16 \
  --lr 2e-3 \
  --no_lr_decay \
  --lr_scheduler_type constant \
  --lr_warmup_steps 0

# Finetuning script
python run_finetuning.py \
  --model_name_or_path "/path/to/pretrained_llava" \
  --train_file "/path/to/instruct_data/train.json" \
  --output_dir "/path/to/finetuned_ckpt" \
  --img_size 336 \
  --prompt_version "v1" \
  --max_length 2048 \
  --per_device_train_batch_size 4 \
  --gradient_accumulation_steps 8 \
  --num_train_epochs 3 \
  --fp16 \
  --lr 2e-5 \
  --no_lr_decay \
  --lr_scheduler_type constant \
  --lr_warmup_steps 0
```

- Serve modules for launching web demos, CLI, and APIs

```bash
# Launch the web UI: a controller plus a Gradio web server
# (each command is long-running; run each one in its own terminal)
python -m llava.serve.controller --host 0.0.0.0 --port 10000
python -m llava.serve.gradio_web_server --controller http://localhost:10000

# Launch a model worker that registers with the controller
python -m llava.serve.model_worker \
  --host 0.0.0.0 \
  --controller http://localhost:10000 \
  --port 40000 \
  --worker http://localhost:40000 \
  --model-path ./checkpoints/llava-13b-v0

# CLI usage
python -m llava.serve.cli \
  --model-path ./checkpoints/llava-13b-v0 \
  --image-file "./image.png"
```

- Evaluation scripts that use GPT-4 to judge model answers

```bash
# Generate LLaVA's answers to the evaluation questions
python model_vqa.py \
  --model-path ./checkpoints/llava-13b-v0 \
  --question-file questions.jsonl \
  --image-folder coco_images \
  --answers-file answers-llava.jsonl

# Ask GPT-4 to review LLaVA's answers against the GPT-4 reference answers
python eval_gpt_review_visual.py \
  --question questions.jsonl \
  --context context.jsonl \
  --answer-list answers-gpt4.jsonl answers-llava.jsonl \
  --rule rules.json \
  --output review.json

# Summarize the review scores
python summarize_gpt_review.py
```
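The review step compares LLaVA's answers with GPT-4's reference answers, and the LLaVA paper summarizes that comparison as a relative score: the model's average judge score divided by the reference's average score. The sketch below computes that ratio from a list of (reference, candidate) score pairs on the judge's 1 to 10 scale. It assumes the scores have already been parsed out of the review file and does not reproduce the exact logic of `summarize_gpt_review.py`; `relative_score` and the example scores are hypothetical.

```python
from statistics import mean
from typing import List, Tuple


def relative_score(pairs: List[Tuple[float, float]]) -> float:
    """Return 100 * mean(candidate) / mean(reference).

    Each pair is (reference_score, candidate_score) from the judge.
    """
    if not pairs:
        raise ValueError("no scored reviews")
    ref = mean(p[0] for p in pairs)
    cand = mean(p[1] for p in pairs)
    return 100.0 * cand / ref


# Made-up judge scores for three questions, for illustration only.
scores = [(8, 6), (10, 9), (6, 6)]
print(f"relative score: {relative_score(scores):.1f}%")
```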

The codebase also includes utilities for quantized (4-bit and 8-bit) inference and multi-GPU training.
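When training runs on several GPUs, the number of examples per optimizer step depends on three settings: the per-device batch size, the number of gradient-accumulation steps, and the number of GPUs. The sketch below computes the effective batch size and the resulting optimizer steps per epoch, using the fine-tuning script's per-device and accumulation values. The GPU counts are assumptions, and 158,000 approximates the 158K instruction examples; the helper names are hypothetical.

```python
import math


def effective_batch_size(per_device: int, grad_accum: int, num_gpus: int) -> int:
    """Examples consumed per optimizer step."""
    return per_device * grad_accum * num_gpus


def steps_per_epoch(num_examples: int, batch: int) -> int:
    """Optimizer steps in one epoch, counting a final partial batch."""
    return math.ceil(num_examples / batch)


# Fine-tuning settings from the script: 4 per device, 8 accumulation steps.
for gpus in (1, 8):
    bs = effective_batch_size(4, 8, gpus)
    print(f"{gpus} GPU(s): batch {bs}, {steps_per_epoch(158_000, bs)} steps/epoch")
```

Scaling out to more GPUs without lowering the per-device batch or the accumulation steps raises the effective batch size, so the learning rate may need retuning.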

Key links:

Conclusion

In summary, LLaVA demonstrates how visual instruction-tuning data generated by GPT-4 can be used to train large multimodal models with strong vision-language abilities. The released dataset, codebase, and models support further research toward models that approach the capabilities of multimodal GPT-4.